I am thrilled to release the second edition. What is the cycle of this newsletter? Is it early? Is it late? The important thing is that we have made it to a second edition, which puts it in the top percentile of newsletters *.

* I made it up

General Application Security

Dynamic Bambdas Generator

Kelsey Henton (a member of my team) recently published a Burp extension to automatically generate Burp Bambda filters based on a set of parameters you would like to investigate within your history. You can find it here.

Web Security Slop

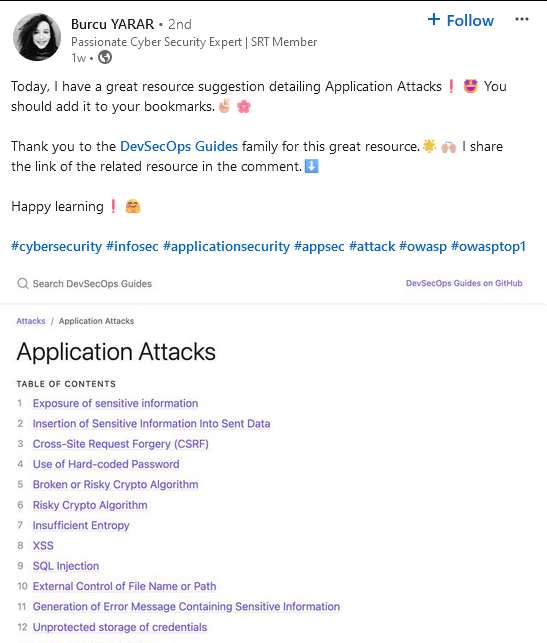

Recently, I’ve noticed a significant decline in the quality of web security content that makes its way to social media, and the use of LLMs appears to be accelerating it. Here is a recent post from LinkedIn:

🤗🤗🤗🤗🤗🤗🤗🤗🤗🤗🤗🤗

Before even looking at the content, I know this is going to be terrible: the post’s style, the owasptop1 tag, the table of contents that has no structure and includes topics that are not “Application Attacks” at all. The post has hundreds of likes and an uncanny comment section. Is this all just AI content for other AIs to comment on?

Digging into the linked document, it took little effort to confirm that an LLM was in the loop here. Just look at this recommendation for mitigating CSRF:

Want to prevent CSRF? Simply don’t introduce vulnerabilities.

My position is that the existence and propagation of this type of content is bad for the industry, but I am not sure what the solution is and I don’t quite understand the incentives at play. Is this all in the pursuit of LinkedIn clout?

Standards and Discussions

The Limitations of Subresource Integrity

Not familiar with SRI? Read this. Here is why it kind of sucks:

1. Automation can undermine its value.
2. Some anti-patterns can nullify the protections of SRI.
3. I keep encountering CDNs (granted, smaller ones) that do not support CORS to permit SRI (see here for why this is a spec requirement). I won’t expand on this one.

I think [1] above should be obvious. The intent of SRI is to ensure that only trusted resources are loaded. In theory, consumers have vetted the resource content to some extent before pinning its hash, but I am sure that is rarely the case. With the drive for faster development cycles and automation, this assurance is further undermined in practice. I think there is good potential for research in this area.
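To illustrate [1], here is a minimal Node.js sketch of how an integrity value is commonly generated in a build step. The helper names `sriIntegrity` and `pinnedScriptTag` and the URL `https://cdn.example/lib.js` are hypothetical. The `sha384-<base64 digest>` format and the `crossorigin` attribute follow the SRI specification.

```javascript
// Sketch: compute a Subresource Integrity value for a script's bytes (Node.js).
const crypto = require('crypto');

// Returns "<algorithm>-<base64 digest>" for use in an integrity="" attribute.
function sriIntegrity(content, algorithm = 'sha384') {
  const digest = crypto.createHash(algorithm).update(content).digest('base64');
  return `${algorithm}-${digest}`;
}

// Builds a script tag pinned to the given content. For cross-origin resources,
// the request must be CORS-enabled (crossorigin attribute plus a permissive
// Access-Control-Allow-Origin response) for the integrity check to pass.
function pinnedScriptTag(src, content) {
  return `<script src="${src}" integrity="${sriIntegrity(content)}" crossorigin="anonymous"></script>`;
}

// Example: pinning whatever bytes a (hypothetical) build step just fetched.
const fetchedBytes = 'console.log("widget loaded");';
console.log(pinnedScriptTag('https://cdn.example/lib.js', fetchedBytes));
```

The hash only attests to the bytes that were fetched when the build ran. If that step automatically fetches and hashes the live CDN copy, a compromised file gets pinned just as readily as a legitimate one.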

Point [2] is the interesting one. It came to my attention during a recent assessment. Here is the problematic snippet of third-party JavaScript:

```javascript
// Excerpt from a third-party script that is itself loaded with SRI.
// `third_party_domain` and `root` are defined elsewhere in the script (not shown).
var embedUrl = 'https://' + third_party_domain + '/embed.php?';
var xhr = new XMLHttpRequest();

document.addEventListener('readystatechange', function (event) {
  if (event.target.readyState === 'complete') {
    xhr.onreadystatechange = function () {
      if (xhr.readyState == 4 && xhr.status == 200) {
        var content = xhr.responseText;
        // Sink: unvetted HTML from embed.php is written straight into the DOM.
        if (root) root.innerHTML = content;
      }
    };
    xhr.open('GET', embedUrl, true);
    xhr.send();
  }
});
```

The issue here is that the script loads content from embedUrl (which points to another third-party resource) and uses innerHTML to add it to the DOM insecurely. So in this case, SRI does not fully mitigate the risk of a resource provider compromise. While an attacker cannot viably tamper with the protected script, they can still conduct a supply chain attack by modifying embed.php to serve malicious content.

So what is the solution? A sufficiently strict Content Security Policy (CSP) can provide the protections offered by SRI while also mitigating this type of attack. In practice, however, strict configurations are rare because they are challenging to implement.
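For reference, here is a minimal sketch of one common shape of strict policy: the nonce-based policy popularized by Google's strict CSP guidance, assuming a Node.js backend that renders the page. The helper names and the widget URL are hypothetical. For the snippet above, the relevant property is the absence of `'unsafe-inline'`, which stops event-handler attributes in markup injected via `innerHTML` from executing. `<script>` elements inserted through `innerHTML` never run in the first place.

```javascript
// Sketch: nonce-based strict CSP for a server-rendered page (Node.js).
const crypto = require('crypto');

// A fresh, unguessable nonce for every response.
function generateNonce() {
  return crypto.randomBytes(16).toString('base64');
}

// Scripts run only if they carry the nonce, or are loaded programmatically by
// a script that does ('strict-dynamic'). With no 'unsafe-inline', inline event
// handlers such as <img onerror=...> injected through innerHTML are blocked.
function buildStrictCsp(nonce) {
  return [
    `script-src 'nonce-${nonce}' 'strict-dynamic'`,
    "object-src 'none'",
    "base-uri 'none'",
  ].join('; ');
}

// Hypothetical page: the third-party widget is allowlisted by nonce, not by URL.
function renderPage(nonce) {
  return `<!doctype html>
<div id="root"></div>
<script nonce="${nonce}" src="https://cdn.example/widget.js"></script>`;
}

// Example: the header/body pair a request handler would send.
const nonce = generateNonce();
const headers = { 'Content-Security-Policy': buildStrictCsp(nonce) };
const body = renderPage(nonce);
console.log(headers, body.length);
```

The nonce has to be regenerated for each response. A static nonce becomes a fixed token that injected markup can simply reuse.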

From a testing perspective, it’s a good idea to consider whether content missing SRI will actually benefit from it.
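As a starting point for that triage, here is a rough sketch that lists cross-origin `<script src>` tags lacking an `integrity` attribute. The function name is hypothetical, and regex matching is a deliberate simplification; a real tool should use an HTML parser. As an assumption about scope, the sketch skips inline and same-origin scripts.

```javascript
// Sketch: list cross-origin <script src> tags without an integrity attribute.
// Regex-based triage helper only; it is not a robust HTML parser.
function findScriptsWithoutSri(html, pageUrl) {
  const pageOrigin = new URL(pageUrl).origin;
  const findings = [];
  for (const [tag] of html.matchAll(/<script\b[^>]*>/gi)) {
    // Require whitespace before "src" so attributes like data-src are ignored.
    const srcMatch = tag.match(/\ssrc\s*=\s*["']?([^"'\s>]+)/i);
    if (!srcMatch) continue; // inline script: SRI does not apply
    const url = new URL(srcMatch[1], pageUrl); // resolve relative URLs
    if (url.origin === pageOrigin) continue; // same-origin: out of scope here
    if (/\sintegrity\s*=/i.test(tag)) continue; // already pinned
    findings.push(url.href);
  }
  return findings;
}

// Example usage on a small HTML fragment.
const sample = '<script src="https://cdn.example/lib.js"></script><script src="/app.js"></script>';
console.log(findScriptsWithoutSri(sample, 'https://app.example/page'));
```

Each hit is only a candidate. Following the reasoning above, the next question is what the script does at runtime. If it pulls further content (like embed.php) into a DOM sink, adding SRI alone will not remove that risk.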

AppSec Teams

Check Out My Talk!

My talk from OWASP AppSec Lisbon is up on YouTube: Building An Effective Application Penetration Testing Team.

Training Programs

Web security training content and programs exist in abundance. Much of the content is terrible, but there is plenty of good content as well. I was recently asked why I put so much effort into building and delivering my own training when it may often seem like a duplication of effort.

I should define what I hope to achieve with a training program. Because formal infosec/cyber education is still quite rare, I assume that trainees enter our program with minimal to no domain expertise. Ideally, they are proficient computer users with some development experience. The goal of the training program is to produce well-rounded application security experts who excel at security testing. This requires coverage of a wide range of topics, the development of technical skills, the development of non-technical skills, and the means to assess knowledge and skills. Additionally, trainees should develop proficiency using our organization-specific tooling, processes, and practices.

Let’s examine one of the better examples of online training for web security testing: PortSwigger’s Web Security Academy. Here is what I really like about the Academy:

It’s freely available! This not only makes this type of training content accessible to people without the means to purchase it elsewhere, but also makes it possible to audit it (as we are doing here to some degree).

It includes relevant assessments (the labs).

It is heavily focused on practical security testing.

It utilizes one of our primary testing tools.

Bonus: the actual certification associated with the program only costs $99 (well, if you have access to Burp Suite Professional). This is fantastic value compared to the many far worse certifications that also require payment just to get access to training material.

Here is what I consider to be missing from the Academy if it were to be used as a sole training program to prepare our application security specialists:

There is an absence of security, development, and general tech fundamentals. Even among the web security topics covered, some are not fully comprehensive.

Some security testing topics are missing.

There is no consideration for the non-technical processes and skills, including reporting, communication, and some of the technical and ethical issues that arise from security testing.

There is no significant consideration for developing and following a comprehensive methodology to test in practice.

Related to the above, there is no evaluation of skills from a procedural perspective.

Training does not occur within the context of our organization’s specific processes and methodology.

The assessments are very limited (I will write about this specifically another time).

These are not necessarily criticisms of the Academy, just limitations when trying to utilize it to build a comprehensive training program. So how did I build our training program? I am glad you asked.

The first step required building a comprehensive curriculum structure/outline. What are all the topics that must be covered? What skills must be learned? What are the intended learning outcomes? How will trainees be assessed? How will trainees be given usable feedback? I used a mind map tool for the initial stage.

When I started on this journey, I was surprised to find that no one had published a comprehensive application security curriculum. There was an effort through OWASP that I tried to get involved with, but it was effectively derelict at the time (though there is a renewed interest!).

I divided the curriculum into modules and tried to identify a structure, tagging prerequisites and using a tiered approach similar to a post-secondary program divided across terms. For each module, I identified essential readings/exercises, summarized relevant information for teaching, and created practical examples where appropriate.

For key topics, I delivered lectures which were recorded and saved in an accessible training archive. While much of the content I delivered was not necessarily novel, I think this approach was much more effective than pointing trainees towards an existing article or YouTube video. Here is why:

I could tailor the content as appropriate for our team and organization.

Running internal training provides more opportunities to interact with the team and assess their progress/challenges.

Live training is simply more dynamic and engaging.

Scheduled live training is more likely to be attended (or viewed) and to hold attention.

As I was delivering the training, I was motivated to provide accurate and useful information, which drove me to greatly refine the content and learn things myself in the process.

Finally, this experience helped me become a better teacher/trainer, and there is value in that.

Ultimately, this is an endless iterative process. I have a large number of modules constantly evolving as I encounter new information. I have delivered a small fraction of these, but I am going to keep at it. Eventually, some content will make its way to the public domain as I do think that this is an area where we need to collaborate more openly as an industry to develop standards and expectations.

Science and Security

LLMs Are Not Going To Do Science For Us

I have watched language models warp many minds as they promise boundless knowledge to those who can wield them. If you could provide this power to someone with no understanding of the context and tech behind LLMs, it could very well drive them mad. Then again, even people who ought to understand how these systems work or what they are capable of do not seem to be handling them with care.

As a newsletter enthusiast, I have been subscribed to Daniel Miessler’s newsletter from a time before the popularization of LLMs. It was a simpler time. Since then, I can only describe the content as increasingly resembling LLM fan fiction. I will never unsubscribe, however. I need to see what is coming next.

I decided to write up this section because science is an interest of mine and I want to bring more empirical methods to application security. So naturally, Miessler’s latest project that hopes to automate science with “AI” (among other things) is of interest.

Here is an excerpt:

I could write in great depth about this, from the misunderstanding of what the actual challenges in science are, to the overconfidence in LLMs in being able to address them (or at least, eventually address them). I think it’s especially funny to imagine PIs sitting around trying to think up ideas to explore.

Like any system, the scientific process and economy are driven by the incentives and structures that enable them. While it may be hard (in some cases) to design robust experiments, a key problem is that it is often acceptable to simply not employ a rigorous methodology. This has led to the unfortunate present reality where the vast majority of papers published in scientific journals are of low quality and a majority could be false.

Consequently, scientific literature used to train LLMs will mostly be garbage. I am not familiar with what literature sources modern LLMs are trained on, but if they exclude paywalled scientific literature, they are even less likely to be trained on actually rigorous science (unfortunately, many top tier journals continue to be closed access).

If there is an immediate use for LLMs in creating scientific output, I would wager it is accelerating the publication of low-quality papers, further polluting the scientific ecosystem with useless papers in predatory (or borderline predatory) journals. Unfortunately, there is some evidence that LLM use is already becoming widespread across the scientific literature, but I have not seen an analysis of its quality and impact.

So no, I am not buying the automated “hypothesis to results” AI pipeline. As with any claim, I can always be convinced to change my position. I just need to see the evidence. Based on the present literature investigating LLM use cases, I do not expect rigorous science any time soon.