Look, I am tired of discussing “AI” and LLMs, but they are here, so we must contend with them.

General Application Security

Writing Pentest Reports with “AI”

My team is migrating to PlexTrac from a legacy reporting system. PlexTrac is not perfect, but we conducted a fairly comprehensive assessment of commercial options and determined that it was likely the best fit for us. At present, I do not regret our decision, but I do wish the PlexTrac team would focus development efforts on features other than integrating language models to write reports.

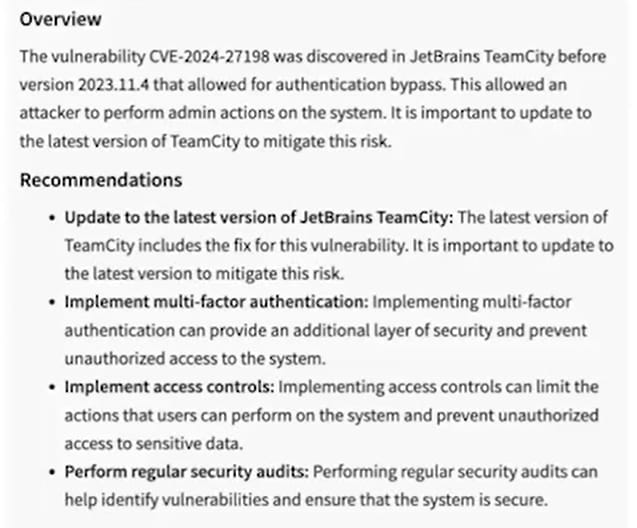

The content generated in their demo video is classic LLM slop that we know and love. Consider the following generated recommendation:

LLMs cannot help themselves from recommending regular security audits

Based on a quick reading of the CVE, MFA appears to be a useless, and therefore incorrect, recommendation, as exploitation bypasses authentication entirely. Implementing access controls is not a correct recommendation either; it is not specific to the root cause (which is documented, as this is a CVE with an available PoC), and it is not something the client can act on, as the flaw exists in third-party software (JetBrains TeamCity). Finally, the suggestion to perform regular security audits… well, obviously this should not be in a recommendation for a specific finding within a security report.

While there are disclaimers to warn users that content may contain errors, the human effort required to validate and correct content in this case is (in my opinion) greater than the effort to simply write a proper recommendation: update JetBrains TeamCity!

Just like traditional autocomplete, I am sure there can be utility in using LLMs to find the desired words or phrasing, but we simply do not need integrations in every product. I can only speculate on the development cycles spent implementing such features without evidence of improved quality or efficiency.

This is the consequence of the hype cycle. Everything must have AI. Why? Because everyone is doing it.

AppSec Standards

The OWASP Top 10 is NOT a Methodology

Please kindly inform your clients and prospective clients that the OWASP Top 10 is not a “Standard Testing Methodology” (quote from an actual RFP) and to instead include details about what their applications do within their RFPs.

Canadian Software Security Standards

I think it is safe to say that Canada is not leading the development or proliferation of software security standards, but existing laws have crossed paths with application security.

An interesting case is the PIPEDA investigation into a BMO breach between 2017 and 2018. Attackers were able to exploit an externally facing application to exfiltrate user data. Based on the description, it sounded like a simple IDOR, supported by an oracle that revealed whether a given identifier (card number) existed, which allowed enumeration.

The investigation concluded that there were deficiencies in secure software development and testing. They also lacked capabilities to detect and respond to automated attacks. Some simple contextual detections could have been implemented (such as identifying if a single session was accessing data across accounts) but were not.
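As a rough illustration of the kind of contextual detection mentioned above, here is a minimal sketch that flags sessions touching more than one account. The log format (pairs of session ID and account ID), the function name, and the threshold are all assumptions for illustration; nothing here reflects the actual systems described in the investigation.

```python
from collections import defaultdict

def flag_cross_account_sessions(events, max_accounts=1):
    """Return the session IDs that accessed more than max_accounts distinct accounts.

    events is an iterable of (session_id, account_id) pairs, a hypothetical
    shape for application access logs.
    """
    accounts_by_session = defaultdict(set)
    for session_id, account_id in events:
        accounts_by_session[session_id].add(account_id)
    return {
        session_id
        for session_id, accounts in accounts_by_session.items()
        if len(accounts) > max_accounts
    }

# Made-up sample events: s1 stays on one account, s2 walks across three.
events = [
    ("s1", "acct-100"),
    ("s1", "acct-100"),
    ("s2", "acct-200"),
    ("s2", "acct-201"),
    ("s2", "acct-202"),
]
print(flag_cross_account_sessions(events))  # {'s2'}
```

A real deployment would likely need a time window and an allowance for legitimate multi-account users, but even this crude rule would surface a session enumerating card numbers.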

There were some apparent gaps in security knowledge (especially application security) on the part of the investigators based on my reading of the report, but the following key deficiencies were still identified and worth noting:

BMO did not conduct application security (penetration) testing as part of its release process.

Penetration testing that was conducted was either conducted by testers without application expertise, or was not scoped to adequately cover applications in-depth.

The case sets a precedent for organizations that are subject to PIPEDA, but our industry still has not reached a level of maturity where we can provide consensus answers to basic questions around methodology and expertise. I am sure we will end up with more methodologies and certifications “based on OWASP Top 10” as a result.

AppSec Teams

Paved Roads for Collaboration

Comparisons between development work and application security testing are common because there is a somewhat shared knowledge base: understanding how software works. Often, AppSec professionals also come from development backgrounds. These two professions are structurally quite different, however.

When I began my role as a manager, I looked to management resources in the development and engineering world for best practices. There is no shortage of resources, but the differences between professions are so great that much of this content was not relevant to security testing.

One particular challenge I struggle with is finding opportunities for collaboration within the team. For the most part, testers act as independent consultants, completing engagements in isolation. This leaves little opportunity for natural collaboration. The challenges are even greater in a remote-only environment.

I do not have an immediate solution or set of best practices, but have tried a few approaches that I would not consider particularly effective. This is fine, good even, as we continue to learn and iterate. I am now also more confident that we need a systems approach.

Collaboration for the sake of it brings back memories of group projects in school; no one likes being forced to do something arbitrary for its own sake (or at least I don’t). Yet there are many occasions where collaboration provides clear benefits. Despite my years of experience in the industry, there are still many times where I would like a second opinion, review, or just a sanity check.

The challenge is creating pathways to connect the right people when the opportunity arises. Obviously, this is a project I will be exploring and will share as I go.

Science and Security

Testing the Tester (AI Edition)

An organization approached me to promote their “fully automated application penetration testing solution” based on AI. Now, I know enough about application security and the state of AI to conclude a priori that this is bullshit, but they had me curious, so I opted to learn more.

The team did not have existing evidence of efficacy to offer, so I suggested an empirical approach: embed their tool with an existing human-driven security testing consultancy and compare identified findings for a reasonable sample of actual applications. Positive results would make a very strong case for use of the tool (you could also compare additional metrics like time and cost). This would also yield a publication that significantly disrupts the industry (and likely many others).

This should be an easy task for a service that claims it can “replace any human penetration tester,” but there is an even easier path to success if your tool can replace human testing efforts in “less than 48 hours.” The bug bounty market is huge. If your tool is as capable as human testers and faster, why not unleash it on the bug bounty world? No need to convince anyone. Go get those bounties!

I am confident enough to offer an even simpler test and I am willing to extend this offer to any organization that is developing an “AI” solution they believe can replace human security testing. For $5,000 (CAD, even), I will develop an application with a single vulnerability and demonstrate that human testers can reliably find and exploit it. If your system can identify the vulnerability without human intervention, I will return your money, endorse your tool, and retire from security testing. I will probably just go teach full time.

I was on the fence about naming the organization developing this tool. On the one hand, they approached me in good faith for my opinion and blessing (in this esteemed newsletter). On the other hand, they say their product makes my job obsolete and they say the “OWASP TOP 10 is the gold standard methodology for testing web applications.” OK. I’ll give them a pass until I think they are impacting anyone’s job. At present, I am not worried.

Note: quotes in this segment were modified so that you cannot identify the organization, but the meaning is unchanged.

A (Novel?) Methodology to Identify LLM Generated Content

I am not fully up-to-date on the present techniques to distinguish LLM content from human written content. I also recognize that this is fundamentally an intractable problem. Nevertheless, I have found an approach that seems to be a reliable way to identify LLM generated content that I have not seen documented elsewhere.

I will spare you the theory and limitations of LLMs and skip straight to the method:

1. Find phrases in the target content that are suspiciously “LLMy.”

2. Search the phrase on Google.

3. Now, filter the search to exclude all results after June 2020 when GPT-3 was released.

If you get many hits after the cutoff date but none before, I would suggest there is a high likelihood the content is generated. There are many factors that could influence the success of this approach, but I have so far found it to be a useful indicator.

Here are some example security-relevant phrases you can try:

"Organizations and individuals can significantly reduce the risks associated with"

“Provide training and guidance to developers on secure coding practices”

“Conduct thorough security testing, including static analysis, dynamic analysis, and penetration testing”

These all sound like something a human could write, but remarkably they all seem to appear exclusively in apparent LLM generated content, which is recognized to reuse patterns. Read through some and you will probably pick up some other cues as well.
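For anyone who wants to run the manual check quickly, the queries can be sketched as below. This assumes Google's `before:` and `after:` date operators and a cutoff of 2020-06-01 for "June 2020"; the function names are hypothetical, and the exact date is a judgment call.

```python
from urllib.parse import urlencode

CUTOFF = "2020-06-01"  # assumed stand-in for the June 2020 GPT-3 release

def build_queries(phrase, cutoff=CUTOFF):
    """Build exact-phrase queries restricted to before and after the cutoff."""
    exact = f'"{phrase}"'
    return {
        "before": f"{exact} before:{cutoff}",
        "after": f"{exact} after:{cutoff}",
    }

def search_url(query):
    """Turn a query string into a Google search URL to open in a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})

queries = build_queries(
    "Provide training and guidance to developers on secure coding practices"
)
for label, query in queries.items():
    print(label, search_url(query))
```

Open both URLs and compare the result counts by eye; the sketch only builds the links and does not fetch or parse anything.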

If you are really ambitious, you could probably spin up some level of automation using a browser extension:

1. Process text content with a moving window similar to a simple compression algorithm.

2. For each window, use a search API to obtain the number of results before and after the cutoff date.

3. Come up with a threshold or likelihood of LLM generation based on results obtained.
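The three steps above could be sketched roughly as follows. This is not a tested detector: `count_hits` is a hypothetical callable you would back with whatever search API you have access to, the window sizes and the `min_after` threshold are arbitrary assumptions, and the canned counts in the demo are made up so the example can run offline.

```python
from typing import Callable, List, Tuple

def windows(text: str, size: int = 8, step: int = 4) -> List[str]:
    """Step 1: slide a fixed-size word window over the text.

    Trailing words that do not fill a whole window are dropped.
    """
    words = text.split()
    if not words:
        return []
    if len(words) <= size:
        return [" ".join(words)]
    return [
        " ".join(words[i:i + size])
        for i in range(0, len(words) - size + 1, step)
    ]

def llm_likelihood(
    text: str,
    count_hits: Callable[[str], Tuple[int, int]],
    size: int = 8,
    step: int = 4,
    min_after: int = 5,
) -> float:
    """Steps 2 and 3: fraction of windows with no hits before the cutoff
    and at least min_after hits after it.

    count_hits(phrase) must return (hits_before_cutoff, hits_after_cutoff).
    """
    phrases = windows(text, size, step)
    if not phrases:
        return 0.0
    suspicious = 0
    for phrase in phrases:
        before, after = count_hits(phrase)
        if before == 0 and after >= min_after:
            suspicious += 1
    return suspicious / len(phrases)

# Offline stand-in for a search API; these counts are invented placeholders.
def fake_count_hits(phrase: str) -> Tuple[int, int]:
    canned = {"provide training and guidance": (0, 40)}
    return canned.get(phrase, (12, 15))

sample = "provide training and guidance to developers on secure"
print(llm_likelihood(sample, fake_count_hits, size=4, step=4))  # 0.5
```

Injecting `count_hits` keeps the scoring logic independent of any particular search provider, and makes it easy to swap in cached results while tuning the threshold.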

For step (3), you could really go deep by training a classifier using this and any other metrics against known good (human written) and bad (LLM slop) content. It’s AI all the way down, which is great for the economy. There will be jobs for people to create the slop, and for people to clean it up.