There is a major hidden cost to the increasing adoption and integration of LLM capabilities in every software platform: other features and bugs are ignored in pursuit of capitalizing on the hype. While I do think there will be effective use cases for LLM assistance in security testing, tool vendors implementing their own flavour instead of improving the core capabilities of their software is definitely not the right approach.

General Application Security

RIP PortSwigger Support Threads

My favourite experience on the dying internet is searching for an issue I have with a software product, finding a link to a thread on exactly the right topic, and then discovering that the link now redirects to a generic support page. Broadcom recently did this to VMware support (though they may have since fixed it), and it seems to be what PortSwigger has done to itself.

Forum links now redirect to a generic support page (due to my slow pace of publishing, it appears that some forum links have now been de-indexed from Google). Great, thanks folks. This resembles the classic trajectory of support enshittification: make the user click and read to exhaustion before they are even able to create a support ticket or engage in forums.

Burp’s Scanner Needs Work

What are they really doing at PortSwigger? Oh yeah, AI stuff (“Computer, please copy and paste <script>alert(1)</script>”). You know what doesn’t need AI to achieve meaningful improvements? Burp’s automated “Audit” scanner. This is a tool I am really rooting for, but it just does not receive the development love it needs, even as PortSwigger leans into their DAST offering.

I have been meaning to closely examine Burp Pro’s scanning capabilities to determine what is and isn’t tested, and to use that information to optimize scanning with Burp. Using the default Audit policy, an active scan targeting a single payload position via Intruder made 226 requests. Of these, only 87 (about 38%) actually inserted a payload into the target position. I knew the scan process included extraneous traffic, but I didn’t think it was this bad. A full 44 of the requests (nearly a fifth of the scan) were just probing the URL path for unrelated GraphQL endpoints! Why would you ever want this when running an endpoint-specific or target position scan?
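Counting this kind of off-target traffic does not have to mean scrolling through Logger by hand. The minimal Java sketch below tallies how many scan requests never reached the endpoint under test. It assumes the raw requests from one scan task have already been collected as strings, in origin-form (`GET /path?query HTTP/1.1`). The class name, sample traffic and `/api/items` path are hypothetical. It only catches path probes like the GraphQL ones. Telling whether an on-target request actually modified the chosen insertion point would also need a comparison against the base request.

```java
import java.util.List;

public class ScanTrafficTally {

    // Returns the path (query string stripped) from the request line of a raw
    // HTTP/1.x request in origin-form, e.g. "GET /api/items?id=1 HTTP/1.1".
    static String path(String rawRequest) {
        String requestLine = rawRequest.split("\r?\n", 2)[0];
        String[] parts = requestLine.split(" ");
        if (parts.length < 2) {
            return "";
        }
        String target = parts[1];
        int query = target.indexOf('?');
        return query >= 0 ? target.substring(0, query) : target;
    }

    // Counts requests whose path differs from the endpoint actually under test.
    static long offTarget(List<String> rawRequests, String targetPath) {
        return rawRequests.stream()
                .filter(r -> !path(r).equals(targetPath))
                .count();
    }

    public static void main(String[] args) {
        // Hypothetical sample traffic; real input would be the requests from one scan task.
        List<String> requests = List.of(
                "GET /api/items?id=1%27 HTTP/1.1\r\nHost: example.com\r\n\r\n",
                "GET /graphql HTTP/1.1\r\nHost: example.com\r\n\r\n",
                "GET /api/graphql HTTP/1.1\r\nHost: example.com\r\n\r\n",
                "GET /api/items?id=%3Cscript%3E HTTP/1.1\r\nHost: example.com\r\n\r\n");
        long off = offTarget(requests, "/api/items");
        // Prints "2 of 4 requests never reached the target path"
        System.out.printf("%d of %d requests never reached the target path%n", off, requests.size());
    }
}
```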

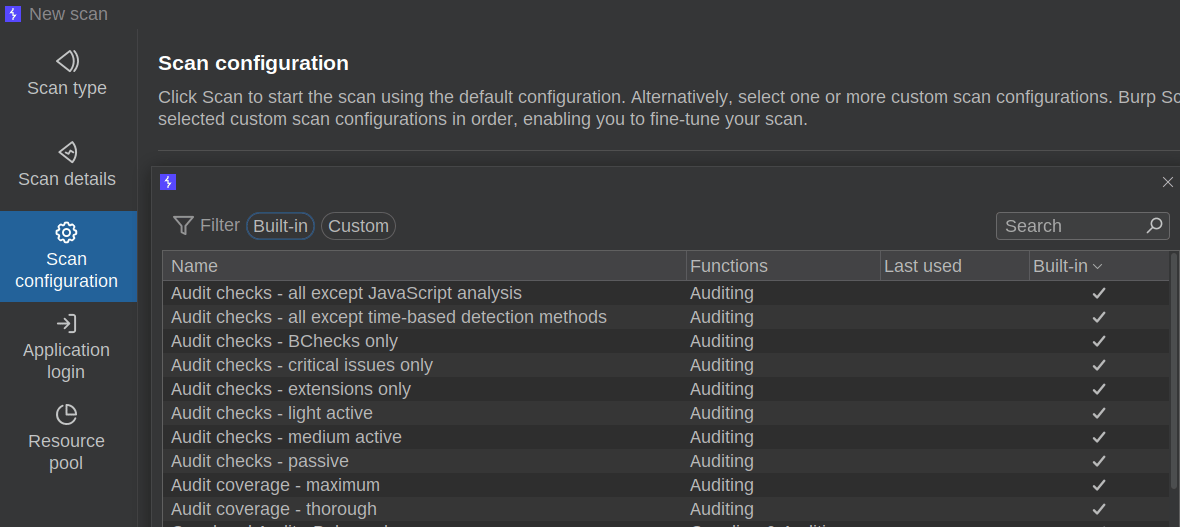

To improve our scanning and avoid the default situation where most scan traffic is unnecessary (and potentially out of scope), we need to look at Burp’s scan configuration. Unfortunately, none of the default Audit scan configurations is particularly useful for targeted scanning.

Is anyone using these default configurations?

To improve scanning for these use cases, you have to create a new Auditing configuration. The configuration interface for selecting test cases is also poorly designed. The relevant section is called “Issues Reported”, but it is better understood as the list of test cases to run. You can select individual test cases, but nothing indicates which ones will place payloads in specific payload positions, which ones are request- or endpoint-specific (like HTTP request smuggling), and which ones are effectively host-level (like guessing URL paths).

The best you can do is infer what test cases may be used based on the issue name.

I made a moderate effort to create a configuration designed for positional scanning (via Intruder’s “Scan Defined Insertion Points”), and you can find the result on my GitHub. You can import it from “Scan configuration”.

Given how hard it is to prevent unwanted scan traffic, it should be no surprise that adding custom test cases is also difficult in Burp. The traditional approach is to write an extension, but this brings its own overhead, maintenance burden, and so on. A number of existing extensions are designed to act as universal custom check interfaces (like Burp Bounty), but these are all severely limited in their capabilities.

The existence of extensions like Active Scan++ and Backslash Powered Scanner (both developed by PortSwigger’s James Kettle) is further evidence that Burp on its own is not customizable enough to implement robust scanning without extensions. While these are great extensions, the extension-driven approach to augmenting automated scanning results in a fragmented and messy ecosystem with poor capabilities to understand and configure scans holistically. Further, innovations implemented in these extensions (like Kettle’s detection logic) are isolated and do not expand the scanner’s detection capabilities universally.

Recognizing the need for a type of universal scan check interface (and surely inspired by Nuclei), PortSwigger implemented BChecks. This was a fine idea but a poor implementation. My team and I made a few attempts to implement missing scanning capabilities as BChecks, but very quickly ran into limitations. The collection of community BChecks is also a complete mess.

With the increasing integration of Bambdas – a direct interface to Java and the Montoya API – it’s a bit of a surprise that PortSwigger has not deployed a more capable interface to augment scanning dynamically with Bambdas. Perhaps they will, but as-is, my team is in search of what the future of our scanning will look like. Unfortunately, it is looking less and less like Burp Suite will provide the solution that we need to be effective app testers.

Another Burp Workaround

Virtually every web app engagement provides an opportunity to discover a limitation within Burp. I didn’t plan for this newsletter to minimize the good work PortSwigger has put into the platform, but the various limitations and missing features are particularly frustrating when so much effort is placed into AI capabilities that remain underwhelming and restrictive (just check out PortSwigger’s own Discord for even more issues users are having).

An essential part of a thorough test is reviewing not just the findings produced by scanning, but also the actual traffic generated by each test case. The scanner often relies on narrow success criteria, such as regular expressions, attached to specific test cases. Reviewing the traffic itself can therefore uncover interesting responses and potential vulnerabilities that the scanner misses (see my complaints above about scanner limitations).

Unfortunately, reviewing ALL traffic from automated scans is impractical, so we still need a mechanism to filter interesting responses. PortSwigger’s James Kettle has actually implemented some useful logic to identify interesting responses, but this functionality is not native in Burp, has limited documentation, and remains isolated within extensions like Turbo Intruder.

Until now, I have taken a simple but limited and inefficient approach: I examine the Logger traffic associated with scan tasks and look for unique responses based on response length or other response properties. What to look for is typically unique to the application or the specific endpoint targeted. There is an extension designed to identify unique responses globally (Response Overview) that I sometimes use, but its scope is often overly broad and its uniqueness logic is far too simplistic and generic to be effective across applications.
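The Java sketch below goes one step beyond length alone. It groups responses by a coarse fingerprint and keeps the first example from each group. The fingerprint attributes (status code, body length, line count and word count) and the `Resp` type are assumptions chosen for illustration. This is a simple stand-in, not Kettle’s logic from Turbo Intruder. Which attributes are worth comparing will still depend on the application.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ResponseFingerprints {

    // Hypothetical minimal response model (status code plus body text).
    static final class Resp {
        final int status;
        final String body;

        Resp(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    // Coarse fingerprint: status code, body length, line count and word count.
    static String fingerprint(Resp r) {
        int lines = r.body.isEmpty() ? 0 : r.body.split("\n", -1).length;
        String trimmed = r.body.trim();
        int words = trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
        return r.status + ":" + r.body.length() + ":" + lines + ":" + words;
    }

    // Keeps the first response for each distinct fingerprint, in original order.
    static List<Resp> uniqueResponses(List<Resp> responses) {
        Map<String, Resp> firstByFingerprint = new LinkedHashMap<>();
        for (Resp r : responses) {
            firstByFingerprint.putIfAbsent(fingerprint(r), r);
        }
        return new ArrayList<>(firstByFingerprint.values());
    }

    public static void main(String[] args) {
        List<Resp> responses = List.of(
                new Resp(200, "Item not found"),
                new Resp(200, "Item not found"),
                new Resp(500, "Unexpected token ' in query"),
                new Resp(200, "Item not found"));
        // Prints "2 unique of 4"
        System.out.println(uniqueResponses(responses).size() + " unique of " + responses.size());
    }
}
```

Adding or dropping attributes in `fingerprint` is where the per-application tuning would happen.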

To save myself the effort of scrolling through Logger history to look through all responses, I decided to implement a Bambda that filters history to display only responses with unique sizes. To do this, there needs to be a stateful mechanism that tracks which response lengths have been seen, so that only one response of each length is displayed. Unfortunately, Bambdas run once per request-response pair and have no mechanism for keeping state between runs.

Luckily, Bambdas expose a Java interface, so there are a number of options available to us. As a workaround, I used the Java Preferences store to add a key for each unique response length; these keys persist across individual Bambda executions. Annoyingly, because the Preferences store is app-wide in scope, it needs to be cleared before each use for the filter to work. You can find scripts for both tasks on my GitHub.
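Here is a minimal standalone sketch of that Preferences-based workaround, written so it can run outside Burp. In an actual Logger Bambda, the length would come from the request-response object the filter receives, and the boolean from `firstSeen` would be the filter’s return value. Here the lengths are simulated with an array. The node path `bambda/unique-response-lengths` and the class name are hypothetical, and this illustrates the idea rather than reproducing the published scripts.

```java
import java.util.prefs.BackingStoreException;
import java.util.prefs.Preferences;

public class UniqueLengthFilter {

    // Hypothetical node path; any node reserved for this filter would do.
    private static final String NODE = "bambda/unique-response-lengths";

    // Returns true only the first time a given response length is seen.
    // Inside a Logger Bambda, this decision would be the filter's return value.
    static boolean firstSeen(int length) {
        Preferences prefs = Preferences.userRoot().node(NODE);
        String key = Integer.toString(length);
        if (prefs.get(key, null) != null) {
            return false;
        }
        prefs.put(key, "seen");
        return true;
    }

    // The store outlives any single filter pass, so it must be cleared before reuse.
    static void reset() {
        try {
            Preferences.userRoot().node(NODE).clear();
        } catch (BackingStoreException e) {
            throw new IllegalStateException("Could not clear preferences node", e);
        }
    }

    public static void main(String[] args) {
        reset();
        int[] lengths = {512, 512, 1337, 512, 404};
        int shown = 0;
        for (int length : lengths) {
            if (firstSeen(length)) {
                shown++;
            }
        }
        // Prints "3 of 5 responses shown"
        System.out.println(shown + " of " + lengths.length + " responses shown");
    }
}
```

Calling `reset()` corresponds to the clearing step described above. If you skip it, lengths recorded during an earlier filter pass stay hidden.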

Vibe Coding

I have been experimenting with LLM-generated code for projects larger than simple one-page scripts. My initial results are very promising for the job security of appsec folks; anyone can quickly create working applications of limited scale/complexity in the most insecure ways possible.

My latest creation had the following flaws built into the first iteration:

Missing access controls

Several vulnerable packages were chosen, including one with a critical Zip Slip vulnerability

An insecure CORS configuration (origin reflection + creds despite the app not needing CORS at all…)
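For context, Zip Slip is path traversal during archive extraction: an entry named something like `../../etc/passwd` is written outside the intended directory. Combined with a process running as root, that is an easy path to overwriting sensitive files. The common guard is to normalize the resolved path and confirm it still sits under the destination before writing anything. The Java sketch below shows that check; the class and method names are hypothetical, and it does not replace updating the vulnerable dependency.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;

public class ZipSlipGuard {

    // Resolves an archive entry name against the extraction directory and rejects
    // names that would land outside it (e.g. "../../etc/passwd" or "/etc/passwd").
    static Path safeResolve(Path destDir, String entryName) throws IOException {
        Path base = destDir.toAbsolutePath().normalize();
        Path target = base.resolve(entryName).normalize();
        // Path.startsWith compares whole path components, so "/tmp/extract-evil"
        // does not count as being inside "/tmp/extract".
        if (!target.startsWith(base)) {
            throw new IOException("Archive entry escapes extraction directory: " + entryName);
        }
        return target;
    }

    public static void main(String[] args) {
        Path dest = Paths.get("/tmp/extract");
        for (String name : new String[] {"docs/readme.txt", "../../etc/passwd"}) {
            try {
                System.out.println("ok: " + safeResolve(dest, name));
            } catch (IOException e) {
                System.out.println("rejected: " + name);
            }
        }
    }
}
```

In an extraction loop, this check would run on each `ZipEntry.getName()` before any output stream is opened.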

In addition, the generated approach to certificate management required running the application with elevated permissions on the server, so there was a very promising vector to RCE as root.

“Vibe coding”, or whatever “coding with AI without knowing how to code” is called, is, as of today, a recipe for disaster if you’re building anything beyond a quick prototype.

We are beginning to see the consequences of this approach, but my favourite new genre is LLM-generated content advising how to fix LLM-generated content. You might think these risks are worth taking for the productivity gains, but even the productivity impact remains contentious. To be fair, quantifying developer productivity is imperfect even in ideal settings, and effective innovations typically outpace our ability to demonstrate their benefits.

AppSec Teams

How Not to Do Hiring

I applied to work at Canonical once. I immediately withdrew my application after seeing their first round of interview questions. You can read about the full process in this post that has reignited the discussion of how ridiculous the Canonical interview process is. In addition to the pseudoscience, the process is just disrespectful. I mean no offence to the people working at Canonical, but the traits that the process selects for are likely not the ones intended.

Connect

Respond to this email to reach me directly.

Connect with me on LinkedIn.

Follow my YouTube channel.